If in recent years the question I heard in boardrooms was: "What can we do with AI?", today it's much simpler: "Where's the money?"

This shift in focus isn't just a market perception; it's a financial warning. Recent data from Forrester indicates that most companies that bet on the rapid ROI of AI will be scaling back their investments this year.

The reason? They discovered the hard way that there's a huge difference between creating a nice "pilot" project and implementing AI on a company's legacy system without breaking the bank or violating compliance regulations.

The Brazilian scenario exacerbates this hangover. With the Selic rate under pressure and the cost of capital sky-high, the "cost of error" has become unaffordable. Innovation can no longer be treated as a hobby.

Either the technology impacts the bottom line (profit), or it will be cut from the budget before the second quarter.

I confess that, as a technology entrepreneur since the days of systems designed for monochrome monitors, I view this movement with relief. AI has never been a magic wand to inflate stock prices, although many have tried to use it that way.

It is, in essence, a workflow reengineering tool. And, as the latest data from MIT and Wharton demonstrate, only organizations that dare to redesign their end-to-end processes are capturing real and sustainable value.

For the rest, there remains the frustration of pilots that never make it to production and the burning of cash in a scenario of expensive capital.

Why AI “destroys” your balance sheet and yet you should still invest.

Many managers still ask me why their AI projects don't take off. The answer, more often than not, lies in the lack of a solid foundation.

Trying to implement advanced AI without organized data is like trying to race in Formula 1 with adulterated fuel. The car might be powerful, but it will sputter on the first lap.

There is a stark difference between "having AI" and "making money or gaining efficiency with AI." MIT released a paper last year that was widely circulated.

This article showed that, despite investments between US$30 billion and US$40 billion, 95% of organizations did not obtain a measurable return on investment (ROI) from their AI projects.

To understand what separates the winners from the rest, I decided to analyze the 5% who succeeded.

They don't just use AI to write emails faster. They use the technology to change how the company works; instead of just automating an old task, they question whether that task should even exist anymore.

But if the technology is revolutionary, why is there no clear measurement and why are margins being squeezed? The answer lies less in the technology and more in the structural mismatch between the AI economy, expectations inflated by social media, the lack of measurement in companies, and traditional accounting.

The first mistake is assuming that the value of AI only appears as new revenue or cost reduction; in practice, much of the ROI of AI is "invisible" to the classic income statement because it manifests as cost avoidance. And as someone who runs a service company, I can say that "cost avoidance" is the only way to scale margins in the long term.

Consider a customer service department, one of the first major real-world success stories with AI. By using a chatbot, the existing team can manage 20% more requests without needing new employees; the real value generated is the "employee-not-hired" strategy.

However, accounting does not record "wages that were never paid," and the cost of AI tools, tokens, and technology is sometimes higher than the cost of new employees, mainly because of the exchange rate. The result is paradoxical: the company becomes more operationally efficient, but its short-term financial indicators worsen.
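The paradox can be sketched with a few lines of Python. Every figure here (team size, cost per agent, dollar-denominated AI bill, exchange rate) is a hypothetical assumption chosen for illustration; only the 20% capacity gain comes from the customer service example above.

```python
from dataclasses import dataclass


@dataclass
class SupportTeam:
    headcount: int
    monthly_cost_per_agent: float  # fully loaded, local currency (hypothetical)


def avoided_hiring_cost(team: SupportTeam, capacity_gain: float) -> float:
    """Monthly cost of the extra agents the team would have needed without AI."""
    extra_agents = team.headcount * capacity_gain
    return extra_agents * team.monthly_cost_per_agent


def ai_bill_local(monthly_bill_usd: float, fx_rate: float) -> float:
    """AI tooling is assumed to be billed in dollars, so the exchange rate scales it."""
    return monthly_bill_usd * fx_rate


# Hypothetical inputs, not data from any real company.
team = SupportTeam(headcount=50, monthly_cost_per_agent=6_000.0)
avoided = avoided_hiring_cost(team, capacity_gain=0.20)
ai_cost = ai_bill_local(monthly_bill_usd=8_000.0, fx_rate=5.5)

# The income statement only sees the new expense.
income_statement_effect = -ai_cost
# The economic view also counts the hires that never happened.
economic_effect = avoided - ai_cost

print(f"Avoided hiring cost (never booked): {avoided:,.0f}")
print(f"AI bill in local currency (booked): {ai_cost:,.0f}")
print(f"Effect visible in the income statement: {income_statement_effect:,.0f}")
print(f"Economic effect including avoided cost: {economic_effect:,.0f}")
```

Under these assumptions the project creates value, yet the only line the income statement shows is a new expense. Raising `fx_rate` shrinks the economic effect and can turn it negative, which is the exchange rate pressure described above.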

In the past, investing in technology meant buying servers and perpetual licenses (Capex, capital expenditure). The money left the cash flow, but entered the balance sheet as an asset that depreciated slowly, protecting net income.

The AI economy has reversed this logic; infrastructure is now rented in the cloud, and consumption is continuous. Each interaction with the model is a recurring expense (Opex). In accounting terms, this immediately reduces EBITDA and net income, while the asset base remains unchanged. Mathematically, this crushes ROE in the short term, making the company appear less efficient precisely when it is building its future competitive advantage.
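A simplified sketch of that accounting difference, in Python. It assumes straight-line depreciation, ignores taxes, and holds equity constant; the spend, baseline net income, and equity figures are hypothetical and serve only to show the direction of the effect.

```python
def capex_year_one(spend: float, useful_life_years: int) -> dict:
    """Purchased asset: only the first year's depreciation reaches net income."""
    depreciation = spend / useful_life_years
    return {
        "ebitda_hit": 0.0,  # depreciation sits below EBITDA
        "net_income_hit": depreciation,
        "asset_on_balance_sheet": spend - depreciation,
    }


def opex_year_one(spend: float) -> dict:
    """Rented consumption: the full amount is expensed as it is used."""
    return {
        "ebitda_hit": spend,
        "net_income_hit": spend,
        "asset_on_balance_sheet": 0.0,
    }


def roe(net_income: float, equity: float) -> float:
    """Return on equity as net income divided by shareholders' equity."""
    return net_income / equity


# Hypothetical company and spend, for illustration only.
BASE_NET_INCOME = 1_000_000.0
EQUITY = 5_000_000.0
SPEND = 500_000.0

capex = capex_year_one(SPEND, useful_life_years=5)
opex = opex_year_one(SPEND)

roe_capex = roe(BASE_NET_INCOME - capex["net_income_hit"], EQUITY)
roe_opex = roe(BASE_NET_INCOME - opex["net_income_hit"], EQUITY)

print(f"Year-one ROE if the spend is Capex: {roe_capex:.1%}")
print(f"Year-one ROE if the spend is Opex:  {roe_opex:.1%}")
```

The same cash outlay produces a much lower first-year ROE when it is booked as Opex, and it leaves nothing on the balance sheet, even though the operational benefit to the company is identical.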

There is no such thing as an account called "hires that weren't made" or "mistakes that were avoided."

How to escape the trap without getting caught up in the hype?

If the problem isn't the technology itself, but how we decide on, measure, and govern AI, then the correct question is no longer "how much does this return?" but "where does this make sense?"

This is precisely where the article "The Gen AI Playbook for Organizations," from Harvard Business School, helps to organize executive thinking, offering a clear selection criterion.

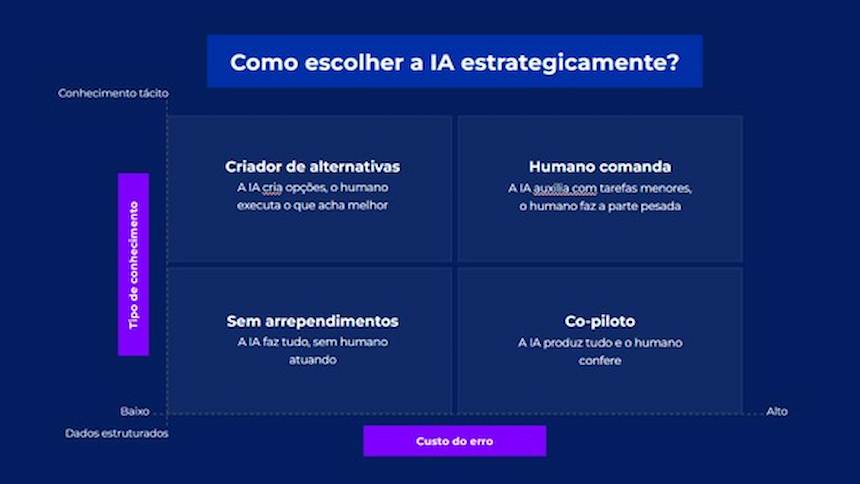

Just as we've been measuring things incorrectly, most companies are also trying to use AI in the wrong places. Instead of starting with the technology's capabilities, the starting point should be the nature of the task and the cost of error associated with it.

This creates a strategic matrix (image above) that separates the wheat from the chaff and protects your cash flow:

Not all work is the same; some tasks rely heavily on tacit knowledge, human judgment, context, accumulated experience, and political awareness of the environment. Others are based on explicit data, clear rules, repetitive patterns, and high volume.

1. The "no regrets" zone: low error cost, structured data. This is where the quick efficiency gains that ease Opex are found: tasks like initial resume screening, drafting operational emails, and summarizing meetings.

The first major initiative I led using generative AI was a selection process with over 4,800 applicants. We used AI for the initial screening, and based on HR's criteria, we selected the top 200, assigning scores and explaining the reasons behind those scores.

Unilever recently announced that with a similar tool, it reduced the hiring time from 4 months to 4 weeks!

Therefore, in quadrant 1, the plan is to go straight to complete automation! AI does the work, nobody needs to review every comma, and the ROI is immediate and easy to see because it frees up team time.

2. Co-pilot mode: high error cost, structured data. Think of programming or legal contracts. AI generates the code or draft in seconds, but an error here can stop the software or trigger a lawsuit.

Law firms have started seeing results with contract generators trained on their own database, using their writing style and with all the security of an SLM (Small Language Model, a compact and specialized version of a traditional LLM).

The plan in this quadrant is to let AI produce while humans review! You gain speed but maintain security, and technical productivity skyrockets.

3. The creator of alternatives: low cost of error, tacit knowledge. Marketing brainstorming, design ideas, slogans... The "error" here is simply a bad idea that can be discarded.

At Christmas 2025, Coca-Cola, in partnership with OpenAI and Bain & Company, launched the Create Real Magic platform. The result: more than 1 million users in 43 markets interacted with the tool, generating thousands of creative variations.

In this case, AI works very well to generate volume and options, then the human chooses the best one. This increases creativity without financial risk.

4. The "human commands" zone: high cost of error, tacit knowledge. This is where companies have recently lost hundreds of millions of dollars by leaving strategic decisions, layoffs, complex medical diagnoses, or high-risk investments to AI. This should NEVER be done.

In this case, AI is merely a research assistant; its role is to take low-value work away from the incredible people in the organization. The final decision will always be human. Trying to replace people in this area to "improve the balance sheet" is the quickest recipe for destroying the company's long-term value.
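The four quadrants can be written down as a simple lookup, sketched here in Python. The enum names, the `PLAYBOOK` table, and the `recommend` function are hypothetical illustrations of the matrix as described in this article, not code from the Harvard Business School piece; the example tasks are the ones mentioned above.

```python
from enum import Enum


class ErrorCost(Enum):
    LOW = "low"
    HIGH = "high"


class Knowledge(Enum):
    EXPLICIT = "explicit"  # structured data, clear rules, high volume
    TACIT = "tacit"        # judgment, context, accumulated experience


# One recommended operating mode per quadrant of the matrix.
PLAYBOOK = {
    (ErrorCost.LOW, Knowledge.EXPLICIT): "no regrets: automate completely",
    (ErrorCost.HIGH, Knowledge.EXPLICIT): "co-pilot: AI produces, humans review",
    (ErrorCost.LOW, Knowledge.TACIT): "creator of alternatives: AI generates options, a human chooses",
    (ErrorCost.HIGH, Knowledge.TACIT): "human commands: AI assists with research, a human decides",
}


def recommend(error_cost: ErrorCost, knowledge: Knowledge) -> str:
    """Return the operating mode for a task, given its two matrix coordinates."""
    return PLAYBOOK[(error_cost, knowledge)]


# Example tasks taken from the quadrant descriptions in the text.
examples = {
    "initial resume screening": (ErrorCost.LOW, Knowledge.EXPLICIT),
    "legal contract drafting": (ErrorCost.HIGH, Knowledge.EXPLICIT),
    "marketing slogans": (ErrorCost.LOW, Knowledge.TACIT),
    "layoff decisions": (ErrorCost.HIGH, Knowledge.TACIT),
}

for task, (cost, kind) in examples.items():
    print(f"{task}: {recommend(cost, kind)}")
```

The hard part in practice is not the lookup but the honest classification of each task: deciding how costly an error really is, and how much of the work depends on tacit knowledge.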

The solution will not be technical either.

AI projects fail more often due to incorrect metrics than technical failures. Evaluating AI solely by direct and immediate ROI is an efficient way to kill promising initiatives early on; well-governed projects use clear intermediate metrics: cycle time, volume processed, actual adoption rate, exception reduction, and system learning over time.

ROI becomes a consequence, not a criterion for early survival.

For leadership, the challenge is twofold: first, accepting that traditional accounting (income statement) will not capture everything that is relevant; second, having the discipline to apply AI in the right areas of the matrix above. We have to stop asking "how much does this AI return," and start looking at: "what type of task is it being applied to and what is the real cost of error?"

The challenge remains significant, but I see signs pointing the way. Trying to automate the strategy is entering the high-risk quadrant of the Harvard matrix; on the other hand, ignoring the automation of the basics risks failure due to inefficiency.

The secret is not to stop investing, but to know how to steer the business. AI doesn't destroy balance sheets; it only exposes, often relentlessly, poorly structured decisions.

*Henrique de Castro is the CEO of New Rizon, a data scientist specializing in AI from MIT, holds an executive MBA from Insper, and is pursuing a master's degree in management for competitiveness from FGV.